Search is continually evolving. We know search changes, and we know that Google like to keep all of us on our toes as we work out which activities lead to that desirable position #1.

You may have seen my thoughts last month, which looked at whether Voice Search is really taking over. In short, we agreed that it isn't. Not to the extent of some predictions anyway. There's a feeling I have that Voice Search is all a bit gimmicky, and whilst certain situations would certainly benefit from the evolving tech, I can't quite bring myself to see every situation benefiting from it, as is often predicted.

There is however one bold upcoming search prediction which I can wholeheartedly get behind. That prediction is the rise of Visual Search.

Now let me be clear, I do not for a second believe this will ever eclipse text search. This is merely a fantastic alternative which could change the way we use apps whilst out and about or in the moment. So let's get down to it.

What is Visual Search?

Visual search is pretty much what it sounds like. You have an image and a search engine is able to show you similar images. In some cases it may also be able to find stores and purchase points. This is different to the existing Image Search, which you're likely more than familiar with. Where image search takes a keyword and presents you with images as your results, visual search is able to identify key components of a photograph and recognise those same components in other photographs on the web.

The challenge with this technology is not only the machine learning required to understand colours, shapes, sizes and patterns, but also having suitably labelled and optimised images available online. We'll come back to what that actually means very shortly...

How can visual search be used?

This is ultimately the defining question which will determine if visual search not only survives its introductory years, but thrives in them. Let's take the example of going to a friend's house and falling in love with their mug, which they've kindly used to give you a perfect brew. (This is probably unlikely to happen in your day-to-day life, but the object was a) nearest to me and b) it allows me to show off a mug, so let's go with it!)

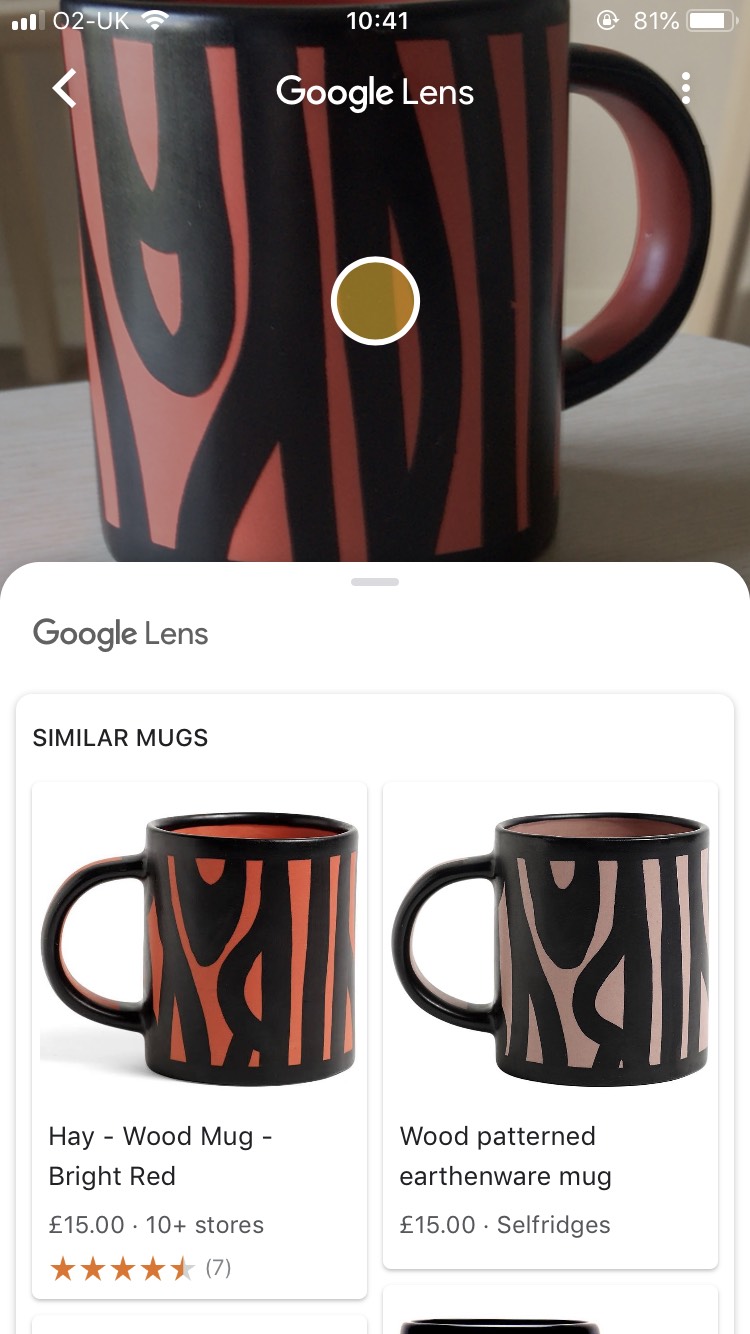

You open Google Lens, you aim the camera at the mug and this is what you see:

Now what? Now you tap on the object and this is what you see:

Suddenly you can purchase the exact same mug, so when you're returning the favour of the perfect brew your dear friend will be either a) confused as to why and how you stole their mug or b) impressed with your immaculate taste.

So, admittedly, photographing mugs might not be the best use of Google Lens, but what this example does show is the power of the tech and the speed with which you can go from no knowledge about a product to purchasing that exact same product. In a retail environment this provides immense power to the customer: not only can you find similar items, you can also perform price comparisons there and then.

I'm a big believer in technology only working and standing the test of time when it adds genuine value to our day-to-day life, and I'm a big believer in the potential of visual search. I honestly feel this has a stronger chance of survival than most other search predictions. The beauty of the tech is that it isn't just about search engines, as Pinterest also have this exact same feature. Naturally, I took some photos of my trainers to illustrate this point:

A pretty specific angle to take this photo...

Bam! Results showing the same shoes (but in different colours), shot from the exact same angle, with the correct model and brand names as suggested topics. Believe me, this tech is taking its baby steps but it is already sophisticated.

What can you do to prepare for this?

Traditional image techniques are still relevant here: you should be focusing your efforts on alt text (often called alt tags), metadata and schema markup. Schema markup and metadata are particularly important. With next to no text involved in a visual search, this type of data could be one of very few sources of information available to Google's crawlers.

This is undoubtedly a tedious task, but time well spent on inputting metadata can be the difference between your webstore or your competitor's appearing in the visual search results.
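To make that a little less tedious, here is a minimal sketch of how the markup for a product image could be generated rather than typed by hand. It builds a schema.org Product block as JSON-LD alongside an image tag with alt text. Everything specific in it is an assumption for illustration only: the function names, the example.com URLs, the brand and the price are all made up, and the fields your own store needs may well differ.

```python
import json
from html import escape


def product_jsonld(name, image_url, description, brand, price, currency, page_url):
    """Return a schema.org Product description as a JSON-LD string.

    The choice of fields here is illustrative, not a complete or required set.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": [image_url],
        "description": description,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "url": page_url,
            "price": price,
            "priceCurrency": currency,
        },
    }
    return json.dumps(data, indent=2)


def image_tag(image_url, alt_text):
    """Return an <img> tag with descriptive alt text, escaped for HTML."""
    src = escape(image_url, quote=True)
    alt = escape(alt_text, quote=True)
    return f'<img src="{src}" alt="{alt}">'


if __name__ == "__main__":
    # Hypothetical product details, used purely as an example.
    markup = product_jsonld(
        name="Blue ceramic mug",
        image_url="https://example.com/img/blue-mug.jpg",
        description="A glazed blue ceramic mug.",
        brand="ExampleBrand",
        price="12.99",
        currency="GBP",
        page_url="https://example.com/products/blue-mug",
    )
    print(image_tag("https://example.com/img/blue-mug.jpg", "Blue ceramic mug on a desk"))
    print('<script type="application/ld+json">')
    print(markup)
    print("</script>")
```

The printed output is intended to be pasted into, or templated onto, the product page. If your store has hundreds of products, feeding a function like this from your product catalogue is likely to be far quicker than writing each block manually.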

The future of visual search

This is the interesting part, and it falls back to the usability of the technology. Where do we go from here? Honestly, it's going to be slow. Technology needs to continue to develop as does user behaviour. We need the tech to add real value and I have one example which could cement visual search as part of our daily or weekly lives.

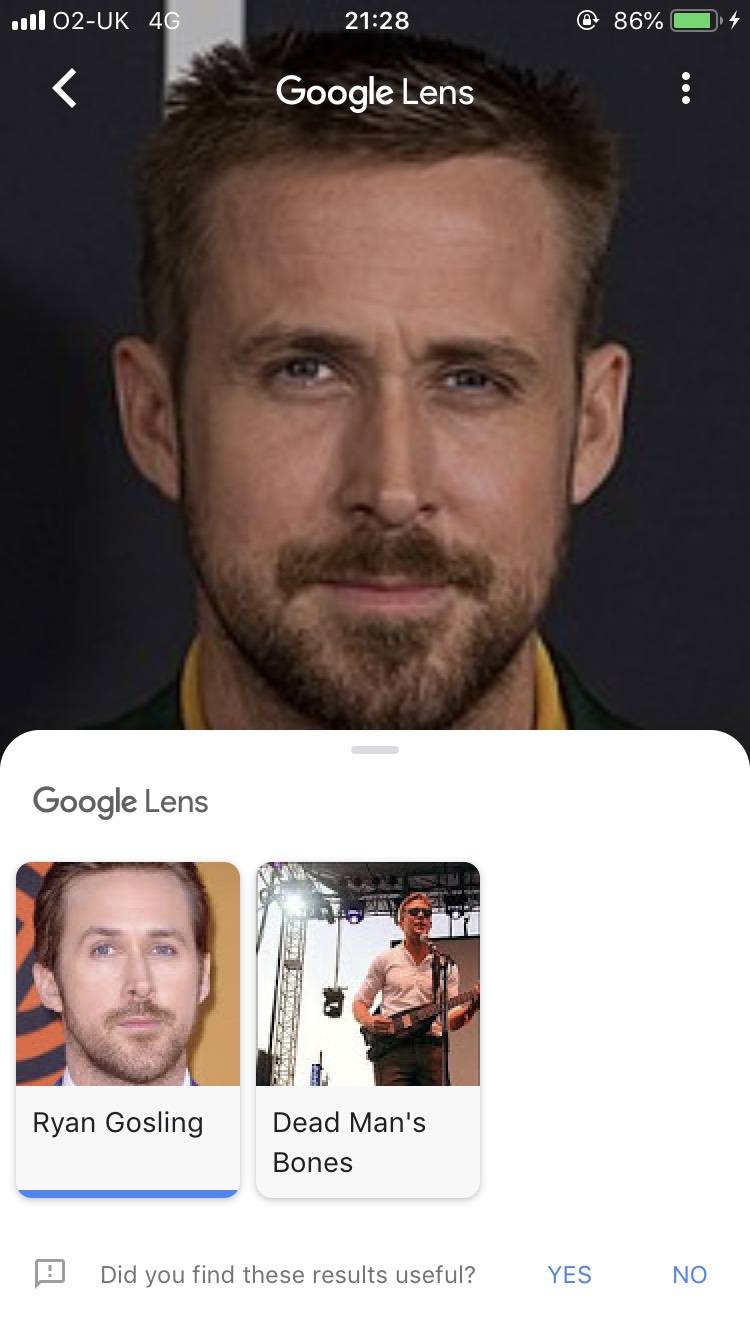

Years ago, when Shazam was booming as groundbreaking technology, my friends and I spoke about having a visual movie version. We would get frustrated trying to recall the names of actors or actresses that we knew we'd seen in other shows/films. We would long for an app where we could photograph them and simply have links to their back catalogue appear as search results. Take a combination of visual search and a nicely packaged app and suddenly visual search starts to become incredibly useful.

You could end up with something like...

Now, excuse me whilst I go and listen to Dead Man's Bones. For those that are wondering, yes. Yes, that's Ryan's band.

About Ascento

Ascento learning and development specialise in providing workforce development apprenticeship programmes to both apprenticeship levy-paying employers and non-levy employers. We work closely with employers to identify the key areas for development and design strategic solutions to tackle these with programmes that are tailored to each individual learner. With two schools of excellence focusing on Management and Digital Marketing, we don’t deliver every qualification under the sun, but focus on what we know best and ensure that quality is at the heart of everything we do.